Fine-tuning a GPT — Prefix-tuning, by Chris Kuo/Dr. Dataman

By A Mystery Man Writer

Last updated 21 Sept 2024

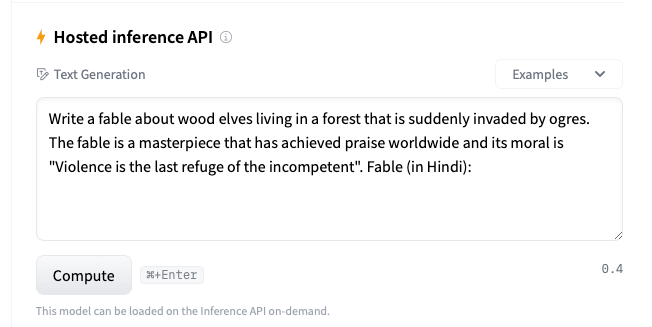

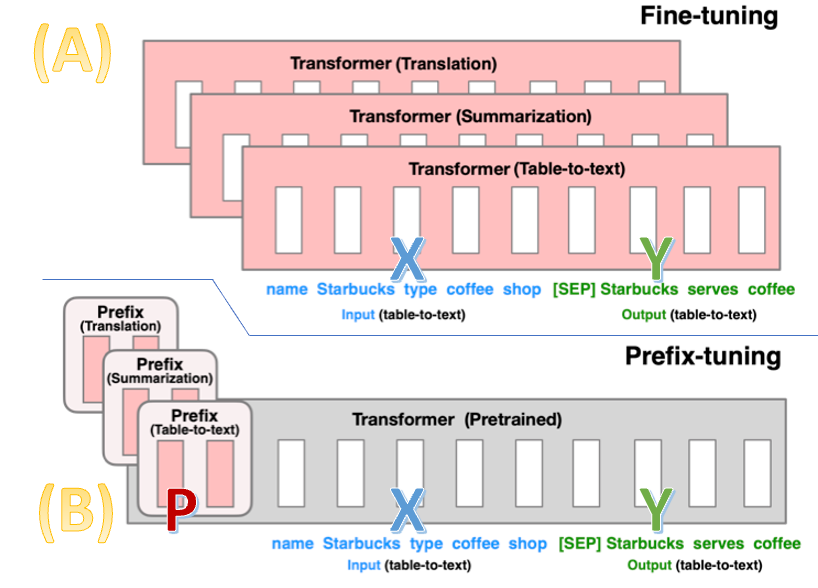

In this post and the ones that follow, I will walk you through the fine-tuning process for a Large Language Model (LLM) or a Generative Pre-trained Transformer (GPT). There are two prominent fine-tuning…

The data that those large language models were built on

Guide to fine-tuning Text Generation models: GPT-2, GPT-Neo and T5

Alternative for fine-tuning? Prefix-tuning may be your answer

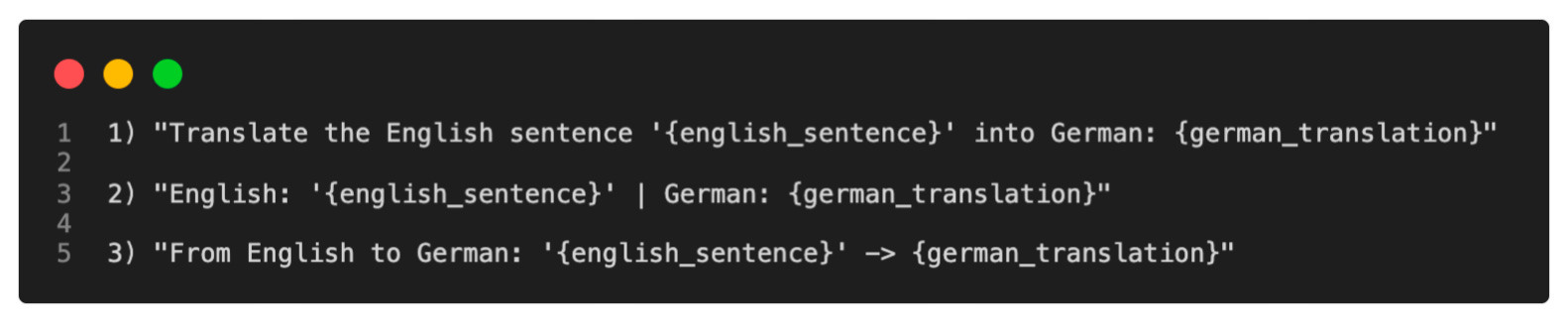

Machine Learning Writing Month: Machine Translation

How LoRA can save you big bucks when training your next custom LLM

Understanding Parameter-Efficient LLM Finetuning: Prompt Tuning

List: LLM, Curated by Olayiwola Samuel Adedeji

Parameter Efficient Fine, PDF

Recommended for you

What's the Difference Between Fine-Tuning, Retraining, and RAG?14 Jul 2023

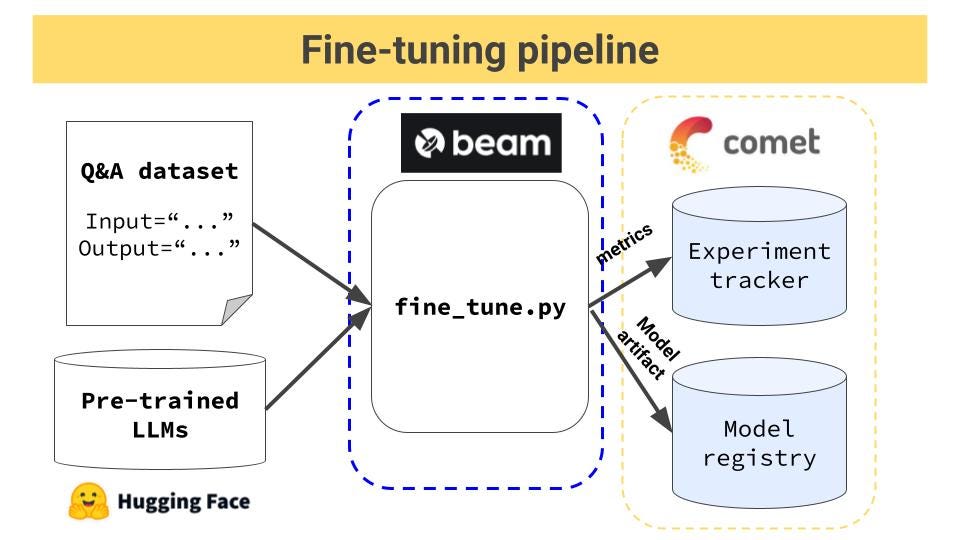

What's the Difference Between Fine-Tuning, Retraining, and RAG?14 Jul 2023 Fine tuning pipeline for open-source LLMs14 Jul 2023

Fine tuning pipeline for open-source LLMs14 Jul 2023 Fine-Tune ChatGPT For Your Exact Use Case14 Jul 2023

Fine-Tune ChatGPT For Your Exact Use Case14 Jul 2023 How to fine-tune your artificial intelligence algorithms14 Jul 2023

How to fine-tune your artificial intelligence algorithms14 Jul 2023 A Complete Guide to Fine Tuning Large Language Models14 Jul 2023

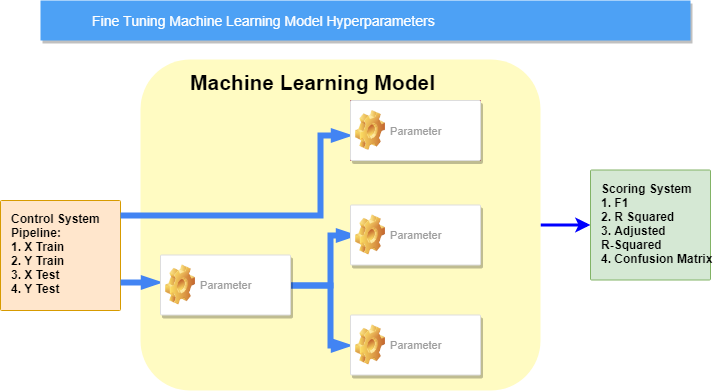

A Complete Guide to Fine Tuning Large Language Models14 Jul 2023 How To Fine Tune Your Machine Learning Models To Improve14 Jul 2023

How To Fine Tune Your Machine Learning Models To Improve14 Jul 2023 How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners14 Jul 2023

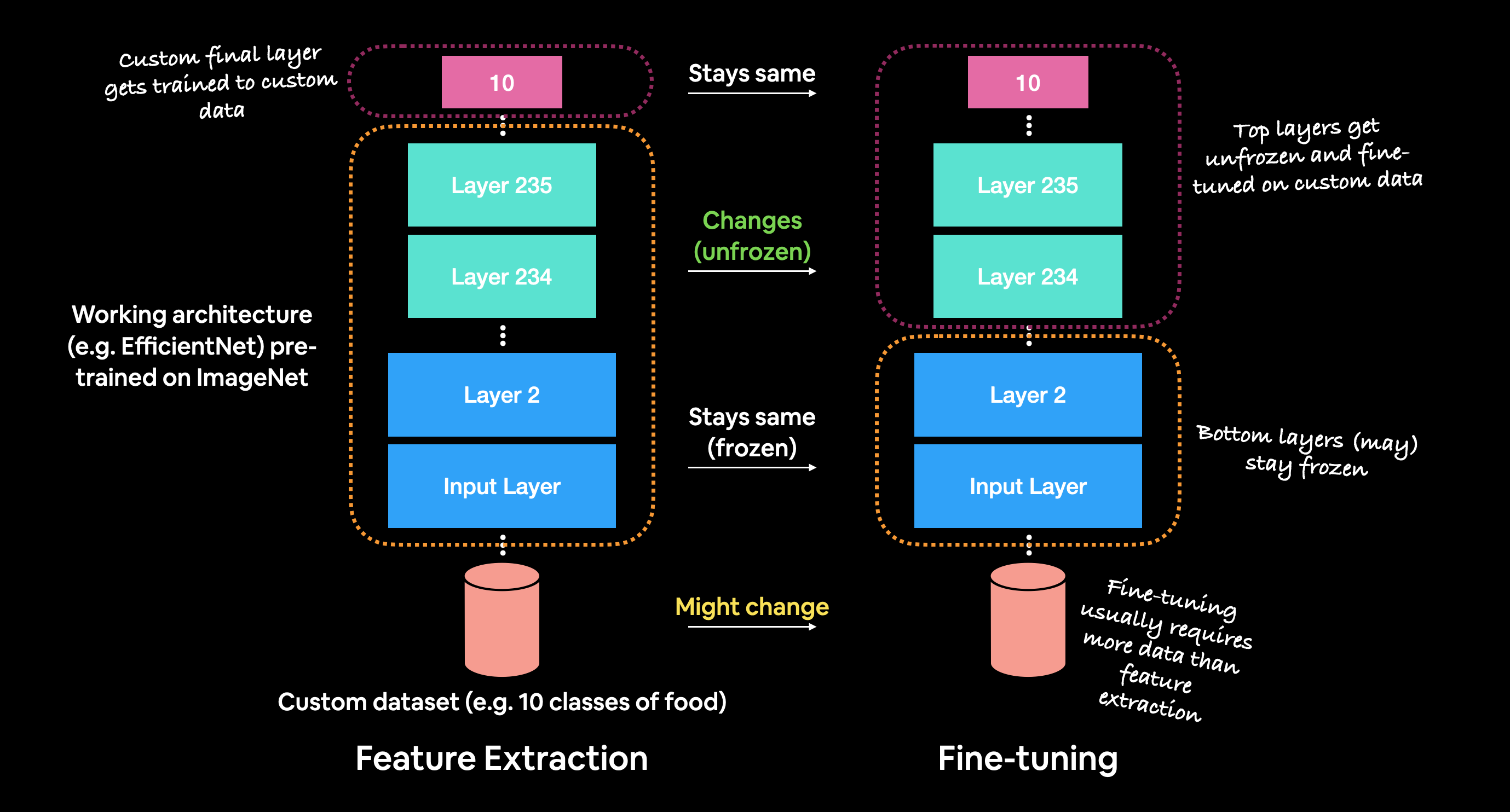

How to Fine-tune Llama 2 with LoRA for Question Answering: A Guide for Practitioners14 Jul 2023 05. Transfer Learning with TensorFlow Part 2: Fine-tuning - Zero to Mastery TensorFlow for Deep Learning14 Jul 2023

05. Transfer Learning with TensorFlow Part 2: Fine-tuning - Zero to Mastery TensorFlow for Deep Learning14 Jul 2023- We fine-tune 7 models including ViTs, DINO, CLIP, ConvNeXt, ResNet, on14 Jul 2023

Optimizing RAG systems with fine-tuning techniques14 Jul 2023

Optimizing RAG systems with fine-tuning techniques14 Jul 2023
